Solar flares are caused by eruptions of the magnetic field near the sun’s surface that send out a blast of charged particles and intense electromagnetic radiation. The flares occasionally interfere with radio communication and electrical lines, but also play a role in the creation of the beautiful aurorae (the northern and southern lights).

Writing in Physical Review Letters, Shreekrishna Tripathi and Walter Gekelman, at the University of California, Los Angeles, US, describe their efforts to understand certain types of magnetic flux eruptions in the solar atmosphere by creating and imaging similar bursts in a laboratory-scale plasma chamber.

Tripathi and Gekelman focus on reproducing what are called “arched magnetic flux ropes,” literal arcs of magnetic flux on the sun’s surface that keep plasma confined for up to days at a time, before erupting. Within the confines of a 4-m-long cylindrical chamber that contains an ambient plasma, they create an arched magnetic field (using two electromagnets) and generate a second plasma that is confined by this magnetic field. The arched magnetic field and the plasma it confines remain stable until two lasers ablate carbon targets near each of the arc’s feet, sending two jetlike blasts of positively charged carbon—roughly 800 amperes—into the flux rope. The current produces its own magnetic field, creating a destabilizing kink in the flux rope that causes it to erupt with a wave of energy.
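For a rough sense of scale, the field produced by an 800-ampere current can be estimated from Ampère's law for a long straight conductor, B = μ0 I / (2π r). This is only a back-of-the-envelope sketch: the straight-wire geometry and the 1 cm distance are illustrative assumptions, not parameters of the experiment.

```python
import math

MU_0 = 4 * math.pi * 1e-7  # vacuum permeability in T*m/A (classical value)

def straight_wire_field(current_amperes, radius_m):
    """Magnetic field (tesla) at distance radius_m from a long straight current."""
    return MU_0 * current_amperes / (2 * math.pi * radius_m)

current = 800.0        # amperes, the figure quoted in the text
assumed_radius = 0.01  # metres; hypothetical distance chosen only for illustration
field = straight_wire_field(current, assumed_radius)
print(f"B = {field:.3e} T at r = {assumed_radius} m")
```

Under these assumptions the field is of order 10^-2 tesla, and it scales linearly with the current and inversely with the distance.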

Tripathi and Gekelman’s images of the outward wave of plasma following the eruption provide a rare, albeit scaled down, glimpse of how such solar events evolve in time. – Jessica Thomas

The supreme task of the physicist is to arrive at those universal elementary laws from which the cosmos can be built up by pure deduction. There is no logical path to these laws; only intuition, resting on sympathetic understanding of experience, can reach them.

Tuesday, August 31, 2010

Producing fake solar flares in the lab

Confined water

The properties of water under ordinary conditions are largely known. Its behavior under unusual conditions is much less explored. For example, what happens to the viscosity and elasticity of water confined between two solid surfaces in a layer only nanometers thick? According to a recent study [1], the water may undergo a solid-like transition that depends on the rate at which the two solids approach each other: the elasticity increases while the viscosity decreases.

[1] Phys. Rev. Lett. 105, 106101 (2010)

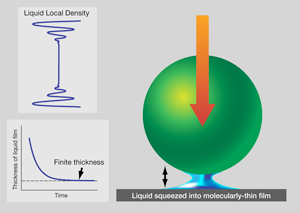

Schematic illustration of a confined fluid. Imagine that a liquid droplet is placed between a ball and a flat surface, and the ball is allowed to fall onto it (right panel). When the thickness of the liquid film is plotted schematically against the time after the ball begins to fall, the thickness remains finite at equilibrium (bottom left panel). This is because the fluid tends to layer parallel to the solid surfaces. When the local liquid density is plotted against the distance between the solid boundaries, it shows decaying oscillations with a period of about one molecular dimension (top left panel). When these density waves come sufficiently close to interfere with one another, the liquid can support force at equilibrium.

Few-body results give hints for many-body ones

Often the few-body and the many-body worlds are, figuratively speaking, worlds apart. For example, the binding energies of the helium-4 dimer and the helium-4 trimer are about 0.001 K and 0.1 K, respectively [1]. The binding energy per particle $E_b$ in the large particle limit, i.e., the binding energy per particle of the homogeneous or bulk system, in contrast, is much larger: $E_b \approx 7$ K [2]. This suggests that the binding energy of the bulk system cannot be predicted on the basis of just the two-particle and the three-particle energies. Indeed, it is found that a quantitatively correct prediction of the binding energy of the bulk requires knowledge of the energies of clusters with up to hundreds of atoms [2].

In an elegant sequence of papers, the first published in 2009 in Physical Review Letters [3] with follow-ups now appearing in Physical Review A [4] and Physical Review B [5], Xia-Ji Liu, Hui Hu, and Peter Drummond of the Swinburne University of Technology in Melbourne, Australia, have predicted the thermodynamic properties of two- and three-dimensional two-component Fermi gases down to unexpectedly low temperatures, purely on the basis of the solutions of just the two-body and the three-body problem. A detailed theoretical analysis, together with an analysis of a set of impressive experimental data for three-dimensional gases [6, 7], provides strong evidence that the approach pursued by Liu and co-workers correctly describes the key physics in a quantitative way. Given the above helium example, though, it seems counterintuitive that knowledge of the two-body and three-body energy spectra is all that is needed. Viewed from this perspective, the work by Liu and co-workers is a beautiful contribution that bridges the few-body and the many-body worlds. Furthermore, it makes an important leap toward determining the equation of state of two-component Fermi gases at any temperature, any interaction strength, and any dimensionality.

The basic constituents of two-component gases are fermionic atoms, such as lithium-6 or potassium-40, in two different hyperfine states. The mixture of atoms can be thought of as a pseudo-spin-1/2 system, where atoms in one hyperfine state are considered “spin up” and those in the other hyperfine state are “spin down.” Liu and co-workers [3, 4] assume a 50-50 mixture of spin-up and spin-down atoms, with equal masses, in three spatial dimensions. An intriguing feature of ultracold atomic gases is their large de Broglie wavelength $\lambda = h/p$, where $h$ denotes Planck’s constant and $p$ the momentum. In practice, the de Broglie wavelength is increased by decreasing the sample’s temperature $T$ ($\lambda \propto 1/\sqrt{T}$). As is well known from textbook statistical mechanics [8], a Bose gas undergoes a transition to a unique state of matter, a Bose-Einstein condensate, when the de Broglie wavelength becomes of the order of the interparticle spacing. In fermionic systems, however, the situation is different. Because of the Pauli exclusion principle, the atomic Fermi gas cannot undergo condensation like a Bose gas but instead becomes Fermi degenerate when the de Broglie wavelength becomes sufficiently large. Below the degeneracy temperature $T_F$, nearly all available energy levels are filled [as indicated in the cartoon in Fig. 1(a)].
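The 1/√T scaling can be made concrete with the thermal de Broglie wavelength, h / √(2π m k_B T), which is the standard textbook definition obtained by inserting a typical thermal momentum into λ = h/p. The sketch below evaluates it for lithium-6; the atomic mass is approximate and the three temperatures are illustrative choices, not values taken from the work discussed here.

```python
import math

PLANCK_H = 6.62607015e-34             # J*s (exact SI value)
BOLTZMANN_K = 1.380649e-23            # J/K (exact SI value)
ATOMIC_MASS_UNIT = 1.66053906660e-27  # kg

def thermal_de_broglie_wavelength(mass_kg, temperature_k):
    """Thermal de Broglie wavelength h / sqrt(2 pi m k_B T), in metres."""
    return PLANCK_H / math.sqrt(2 * math.pi * mass_kg * BOLTZMANN_K * temperature_k)

lithium6_mass = 6.015 * ATOMIC_MASS_UNIT  # approximate mass of a lithium-6 atom

# Illustrative temperatures in kelvin: room temperature, 1 mK and 1 microkelvin.
for temperature in (300.0, 1e-3, 1e-6):
    wavelength = thermal_de_broglie_wavelength(lithium6_mass, temperature)
    print(f"T = {temperature:g} K  ->  lambda = {wavelength:.3e} m")
```

Lowering the temperature by a factor of four doubles the wavelength, which is why cooling is the practical route to the degenerate regime.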

Currently, a major quest in the field of cold atom physics is the accurate determination of finite-temperature thermodynamic quantities such as the energy, entropy, and chemical potential. Possibly the most straightforward approach to obtain these observables is to simulate the $N$-body problem. Of course, any such treatment requires integrating over $3N$ degrees of freedom, a task that can, in general, only be performed through Monte Carlo sampling. For fermions, however, Monte Carlo approaches are, except for a few fortuitous exceptions, plagued by the so-called sign problem [9], posing severe limitations on the applicability of this approach. Alternatively, one might think of employing an approach that expands around a small parameter, as did Liu and co-workers: They pursued a cluster, or virial, expansion approach [8], which treats the $N$-body problem in terms of one-body, two-body, three-body and so on clusters or subsystems. In particular, the thermodynamic potential $\Omega$, $\Omega \propto \sum_n b_n z^n$ (here, $n$ labels the cluster), is expanded in terms of the fugacity $z$, which is a small parameter at large $T$ but increases with decreasing $T$ [see Fig. 1(c)]. The expansion in terms of $z$ is equivalent to an expansion in terms of the “diluteness parameter” $\rho\lambda^3$ [8], where $\rho$ is the density and $\lambda$ the de Broglie wavelength, which increases with decreasing $T$. The $n$th virial coefficient $b_n$ is determined by the energy spectrum of the $n$th cluster; naturally, the determination of the virial coefficients becomes significantly more involved as $n$ increases. At low $T$ (large $z$), where only the lowest energy levels are filled [Fig. 1(a)], one needs the lowest portion of the energy spectrum of many clusters. At high $T$ (small $z$), where more of the higher energy levels are occupied [Fig. 1(b)], one needs a large portion of the energy spectrum of just the smallest clusters. A natural question is thus how many clusters, and how much of their energy spectrum, are needed to describe the particularly interesting regime in the vicinity of the temperature where the Fermi gas becomes degenerate.
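The trade-off described above can be illustrated with a toy truncation of the series Σ b_n z^n. The sketch below compares a second-order and a third-order truncation at several fugacities. The coefficients are placeholders chosen only for illustration; they are not the virial coefficients computed in Refs. [3, 4].

```python
def truncated_virial_sum(fugacity, coefficients):
    """Return the sum over n of b_n * z**n, with coefficients = [b_1, b_2, ...]."""
    return sum(b_n * fugacity ** n for n, b_n in enumerate(coefficients, start=1))

# Placeholder coefficients for illustration only (hypothetical values).
example_coefficients = [1.0, 0.5, -0.2]

for z in (0.1, 0.5, 1.0):
    second_order = truncated_virial_sum(z, example_coefficients[:2])
    third_order = truncated_virial_sum(z, example_coefficients)
    relative_shift = abs(third_order - second_order) / abs(third_order)
    print(f"z = {z}: the third-order term shifts the sum by {relative_shift:.1%}")
```

At small z the higher-order term barely matters, while at z of order one it changes the result noticeably. That is the sense in which low temperatures demand more clusters.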

Liu and co-workers found that it is sufficient to treat the two-body and the three-body problem [3, 4], that is, the second and third terms in the virial expansion. To elucidate how to calculate the energy spectra of the two-body and three-body systems, let us return to the de Broglie wavelength. The de Broglie wavelength not only determines the degeneracy temperature of Bose and Fermi gases, but also sets a “resolution limit” when two atoms collide. Much like in a light microscope, two colliding atoms can only probe those features of the underlying interaction potential that are of the order of, or larger than, the de Broglie wavelength. This is a key ingredient to Liu and co-workers’ successful treatment. In the temperature regime of interest, “head-on” s-wave collisions between spin-up and spin-down atoms dominate and higher partial wave contributions are strongly suppressed [10]. Motivated by the microscope resolution analogy, these s-wave collisions can be treated by replacing the true atom-atom interaction potential by a simple short-range boundary condition on the wave function, which encapsulates the “net effect” of the true atom-atom potential. This replacement is designed to leave the low-energy atom-atom phase shift, which determines many observables of trapped cold atom gases, unchanged. However, it eliminates all the unwanted high-energy physics, thereby tremendously simplifying the theoretical treatment of the problem. Interestingly, these ideas go back to Fermi’s groundbreaking 1934 paper on the scattering between slow neutrons and bound hydrogen atoms [11].

Replacing the true atom-atom interactions by properly chosen boundary conditions, the quantum mechanical two-particle and three-particle systems become soluble. As has been shown in Busch et al.’s seminal work [12], the determination of the two-particle energy spectrum reduces to finding the roots of a simple transcendental equation. The determination of the three-body energy spectrum is more involved and several approaches exist. Among these, the approach put forward by Kestner and Duan [13], and subsequently refined by Liu and co-workers [3, 4], is particularly appealing since it allows for an efficient and accurate determination of essentially the entire energy spectrum for any interaction strength. Equipped with the two-body and the three-body energy levels, Liu and co-workers calculated the virial coefficients $b_2$ and $b_3$, and determined, using the thermodynamic potential $\Omega$, observables such as the entropy for large samples of trapped two-component Fermi gases as a function of the temperature.
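To make the "simple transcendental equation" tangible, here is a minimal root-finding sketch. It assumes the form commonly quoted for two atoms with s-wave scattering length a in an isotropic harmonic trap, √2 Γ(3/4 − E/2) / Γ(1/4 − E/2) = a_ho / a, with E the relative-motion energy in units of ħω and a_ho = √(ħ/(mω)) for atoms of mass m. These unit conventions are an assumption and should be checked against Ref. [12]. Multiplying through by 1/Γ(3/4 − E/2) to avoid the poles, and the use of plain bisection, are choices made for this sketch.

```python
import math

def reciprocal_gamma(x):
    """1/Gamma(x), set to 0 at the poles of Gamma (x = 0, -1, -2, ...)."""
    if x <= 0 and x == math.floor(x):
        return 0.0
    return 1.0 / math.gamma(x)

def busch_residual(energy, aho_over_a):
    """Pole-free residual: sqrt(2)/Gamma(1/4 - E/2) - (a_ho/a)/Gamma(3/4 - E/2)."""
    return (math.sqrt(2.0) * reciprocal_gamma(0.25 - energy / 2.0)
            - aho_over_a * reciprocal_gamma(0.75 - energy / 2.0))

def find_level(lower, upper, aho_over_a, tolerance=1e-12):
    """Bisection for one root of busch_residual inside the bracket [lower, upper]."""
    f_lower = busch_residual(lower, aho_over_a)
    f_upper = busch_residual(upper, aho_over_a)
    if f_lower == 0.0:
        return lower
    if f_upper == 0.0:
        return upper
    if f_lower * f_upper > 0:
        raise ValueError("bracket does not enclose a sign change")
    while upper - lower > tolerance:
        middle = 0.5 * (lower + upper)
        f_middle = busch_residual(middle, aho_over_a)
        if f_middle == 0.0:
            return middle
        if f_lower * f_middle < 0:
            upper = middle
        else:
            lower, f_lower = middle, f_middle
    return 0.5 * (lower + upper)

# Infinite scattering length (a_ho / a = 0): the two lowest relative energies.
unitarity_levels = [find_level(0.0, 1.0, 0.0), find_level(2.0, 3.0, 0.0)]
# A finite, positive scattering length a = a_ho (illustrative choice).
lowest_level_finite_a = find_level(-3.0, 0.5, 1.0)
print(unitarity_levels, lowest_level_finite_a)
```

With a_ho / a = 0 the roots sit at the poles of Γ(1/4 − E/2), that is, at E = 1/2, 5/2, ... in units of ħω. Levels obtained this way are the input from which a coefficient such as b_2 would be built.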

Building upon the results by Liu and co-workers, Salomon’s group extracted the fourth-order virial coefficient from experimental data [7]. This analysis confirmed that much of the thermodynamics is determined by the second and third virial coefficients, at least down to temperatures around the degeneracy temperature.

The work by Liu and co-workers beautifully demonstrates how accurate solutions of the few-body problem also provide a great deal of insight into the many-body problem. The work has already had a profound impact on determining the equation of state of s-wave interacting Fermi gases in three dimensions. Moreover, the framework has been extended to predict thermodynamic properties of strictly two-dimensional Fermi gases [5], which can be realized experimentally with present-day technology. It would be interesting to extend the treatment of Liu and co-workers to systems that live in mixed dimensions [15], where, say, the spin-up atoms move in three spatial dimensions and the spin-down atoms move in two spatial dimensions. In such a system, the spin-up atoms move freely in the third spatial dimension and interact with the spin-down atoms only when they pass through the plane in which the spin-down atoms live. Finally, another open challenge is the finite-temperature treatment of two-component Fermi gases with unequal masses. For these problems, as well as others, Liu et al.’s calculations should provide a solid jumping off point.

References

[1] See, e.g., B. D. Esry, C. D. Lin, and C. H. Greene, Phys. Rev. A 54, 394 (1996); R. E. Grisenti, W. Schöllkopf, J. P. Toennies, G. C. Hegerfeldt, T. Köhler, and M. Stoll, Phys. Rev. Lett. 85, 2284 (2000).

[2] V. R. Pandharipande, J. G. Zabolitzky, S. C. Pieper, R. B. Wiringa, and U. Helmbrecht, Phys. Rev. Lett. 50, 1676 (1983).

[3] X.-J. Liu, H. Hu, and P. D. Drummond, Phys. Rev. Lett. 102, 160401 (2009).

[4] X.-J. Liu, H. Hu, and P. D. Drummond, Phys. Rev. A 82, 023619 (2010).

[5] X.-J. Liu, H. Hu, and P. D. Drummond, Phys. Rev. B 82, 054524 (2010).

[6] L. Luo, B. Clancy, J. Joseph, J. Kinast, and J. E. Thomas, Phys. Rev. Lett. 98, 080402 (2007).

[7] S. Nascimbene, N. Navon, K. J. Jiang, F. Chevy, and C. Salomon, Nature 463, 1057 (2010).

[8] See, e.g., D. A. McQuarrie, Statistical Mechanics (University Science Books, Sausalito, 2000).

[9] M. Troyer and U.-J. Wiese, Phys. Rev. Lett. 94, 170201 (2005).

[10] H. A. Bethe, Phys. Rev. 47, 747 (1935); E. P. Wigner, Phys. Rev. 73, 1002 (1948).

[11] E. Fermi, Nuovo Cimento 11, 157 (1934).

[12] T. Busch, B.-G. Englert, K. Rzazewski, and M. Wilkens, Found. Phys. 28, 549 (1998).

[13] J. P. Kestner and L.-M. Duan, Phys. Rev. A 76, 033611 (2007).

[14] G.-B. Jo, Y.-R. Lee, J.-H. Choi, C. A. Christensen, T. H. Kim, J. H. Thywissen, D. E. Pritchard, and W. Ketterle, Science 325, 1521 (2009).

[15] Y. Nishida and S. Tan, Phys. Rev. Lett. 101, 170401 (2008); G. Lamporesi, J. Catani, G. Barontini, Y. Nishida, M. Inguscio, and F. Minardi, Phys. Rev. Lett. 104, 153202 (2010).

About the Author

Doerte Blume

Doerte Blume received her Ph.D. in 1998 from the Georg-August-Universität Göttingen, Germany. Her Ph.D. research on doped helium droplets was conducted at the University of California, Berkeley, and the Max-Planck Institute for Fluid Dynamics, Göttingen. After a postdoc at JILA, Boulder, she joined the physics faculty at Washington State University in 2001. Her current research efforts are directed at the theoretical description of atomic and molecular Bose and Fermi systems.

Thursday, August 26, 2010

Black hole theory relevant to the non-Fermi-liquid problem?

Fermi liquid theory explains the thermodynamic and transport properties of most metals. The so-called non-Fermi liquids deviate from these expectations and include exotic systems such as the strange metal phase of cuprate superconductors and heavy fermion materials near a quantum phase transition. We used the anti–de-Sitter/conformal field theory correspondence to identify a class of non-Fermi liquids; their low-energy behavior is found to be governed by a nontrivial infrared fixed point, which exhibits nonanalytic scaling behavior only in the time direction.

For some representatives of this class, the resistivity has a linear temperature dependence, as is the case for strange metals.

Science 329, 1043 (2010)

Coherent motions of excitons in GaAs

Because of the correlations between electrons and holes, photoexcitation results in the formation of collective electronic states that, in turn, contribute significantly to phenomena such as nonlinear optical properties, optical gain in laser media, the dynamics of highly excited states and the production of ‘entangled’ photons. Although the authors’ experiments [2] indicate the presence of correlations (Fig. 1), do they reveal how the details or implications of such correlations relate to these kinds of phenomena? It seems not. The energy shifts recorded in the experiments (the binding energies of biexcitons and triexcitons) arise from a combination of two major effects. The first, which probably dominates, is explained by theories such as the Hartree–Fock method. These theories evaluate the average electron–hole attractions, electron–electron (hole–hole) repulsions and quantum-mechanical exchange corrections to these Coulomb interactions by assuming that the charges are spread out in space according to the probability that the particle could be found there. The second effect, called electron correlation in the quantum-chemical literature [7], describes the fascinating way in which electrons and holes tend to coordinate their motions to minimize repulsions. For example, two electrons might move in such a fashion that they avoid crossing paths. The complexity of this problem scales steeply with the number of particles involved. Although current investigations into many-body effects provide an important first step to understanding how groups of carriers interact collectively, they cannot quantify the average Coulomb repulsions and attractions relative to how these are modified by correlations in multiparticle motions.

Turner, D. B. & Nelson, K. A. Nature 466, 1089–1092 (2010)

Wednesday, August 25, 2010

Surface states

Introduction to surface states:

[1] http://philiphofmann.net/surflec3/surflec015.html

[2] http://en.wikipedia.org/wiki/Surface_states

Monday, August 23, 2010

The 5/2 enigma in the FQHE

… larger mystery surrounding the fractional quantum Hall effect in the second Landau level. The other observed fractions, such as 2 + 1/3, 2 + 2/3, 2 + 2/5, 2 + 3/5, match those observed in the lowest Landau level, but detailed calculations show, surprisingly, that the model of weakly interacting composite fermions, which is successful for the explanation of the lowest Landau level fractions, is not adequate for these second Landau level fractions. A resolution of the second Landau level fractional quantum Hall effect is likely to lead to much exciting physics. [PRL 105, 096801 (2010)]
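For orientation, the lowest-Landau-level fractions that the composite-fermion picture organizes are usually written as the Jain sequences ν = n / (2pn ± 1). The sketch below enumerates them with exact rational arithmetic and then adds 2 to list the second-Landau-level counterparts. That shift is pure bookkeeping: as the passage above stresses, the weakly interacting composite-fermion model is not adequate there. The function name and the cutoff are choices made for this example.

```python
from fractions import Fraction

def jain_fractions(max_n, flux_pairs=1):
    """Fillings n/(2*p*n + 1) and n/(2*p*n - 1) below 1, for n = 1..max_n."""
    p = flux_pairs
    fillings = set()
    for n in range(1, max_n + 1):
        for denominator in (2 * p * n + 1, 2 * p * n - 1):
            filling = Fraction(n, denominator)
            if filling < 1:  # drop nu = 1, which is an integer filling
                fillings.add(filling)
    return sorted(fillings)

lowest_level = jain_fractions(3)
second_level = [2 + filling for filling in lowest_level]
print("lowest Landau level:", [str(f) for f in lowest_level])
print("second Landau level:", [str(f) for f in second_level])
```

With the cutoff n = 3 the list contains 1/3, 2/5, 3/7, 3/5 and 2/3, which covers the four fractions named in the text.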

US sets dark things as cosmic priorities

Over ten years, the US$465-million observatory will also build up an unprecedented 100-petabyte database for astronomers trying to discern the nature of two mysterious factors that shape the Universe. One is dark matter, thought to be an unknown particle or family of particles beyond the standard model of physics. Hidden in vast quantities among the galaxies, dark matter generates a gravitational pull that has shaped the evolution of the Universe. The other factor is dark energy, the pervasive but mysterious phenomenon that is causing cosmic expansion to accelerate. Crucial data on both factors can be derived from a three-dimensional survey of the surrounding Universe that the LSST is well suited to provide.

"Increasingly, we are able to ask new questions by querying huge databases," says Tyson. "The key is to populate those databases with calibrated and trusted data."

The LSST is expected to help US astronomers regain some momentum in ground-based astronomy at a time when European facilities have begun to dominate the field. To that end, the survey stresses the need for a swift decision on which of two competing mega-telescopes should receive federal funding.

The proposed Thirty Meter Telescope, on Mauna Kea in Hawaii, and the Giant Magellan Telescope, envisioned for Las Campanas in Chile, are both supported by significant private money, and would have many times the light-gathering power and resolution of today's largest telescopes. Realistically, only one project will receive federal funds, which the survey recommends should be between $257 million and $350 million. Given that Europe has also prioritized a 42-metre telescope, the European Extremely Large Telescope, a choice needs to be made now to avoid a counterproductive stalemate.

In space, the decadal survey proposes the Wide Field Infrared Survey Telescope (WFIRST), a 1.5-metre instrument that will map the whole sky at near-infrared wavelengths. Such data would contain subtle clues — in the distance–brightness relationships of supernovae, the bending of light (microlensing) from background galaxies and the three-dimensional clustering of matter in space — that can be used to independently measure dark energy.

WFIRST is effectively a rebranding of the Joint Dark Energy Mission, a NASA–DOE collaboration. The new name, says one survey reviewer, signals that the $1.6-billion telescope is not a one-trick pony, but a way of serving other astronomical needs as well. The survey committee stresses, for example, that WFIRST could spot microlensing events caused when exoplanets — planets outside our Solar System — pass briefly in front of background stars in the Milky Way. Although the method is unsuitable for studying individual solar systems in detail, it promises, through its sheer number of discoveries, to provide an unbiased sample of the kinds of planetary systems prevalent in the Galaxy.

Sunday, August 22, 2010

Topological insulators and one of their creators

Below is the link to this talk:

http://www.youtube.com/watch?v=Qg8Yu-Ju3Vw&NR=1

And below is the link to a conversation with the speaker:

http://www.youtube.com/watch?v=d5s6nZtPAHE&feature=related

Thursday, August 19, 2010

The interrelation between CuO2 layers within the same unit cell

I mostly confine my current research to single-CuO2-layer cuprates such as LSCO or NCCO, although I would also like to pay attention to multi-layer ones. I feel the most mysterious physics should already exist in a single isolated layer, whereas inter-layer correlations are only secondary effects. A recent publication [1] showed an interesting aspect of inter-layer interrelations. It was discovered in Bi2Sr2Ca2Cu3O10+δ (Bi2223), which has three closely coupled CuO2 layers within a unit cell. The authors found that, as the pressure is varied monotonically, Tc rises to a maximum, then falls to a trough, and eventually rises to a new peak surpassing the earlier one. They interpreted this finding in terms of two energy scales, the pairing energy and the phase coherence energy, together with the observation that the hole concentration is enhanced by pressure. All this is summarized in the figure.

But it should be useful to consider a different picture. Suppose that at low concentration all three layers are underdoped superconductors. As the concentration increases, holes may transfer from the inner layer to the two outer layers, so that the inner layer stays underdoped while the outer ones reach optimal doping (the first maximum). As the concentration increases further, the extra holes can hardly be deployed to increase Tc [2], until the point where it becomes favorable for the extra holes to go to the inner layer; Tc then rises again. This picture could also explain the observed Tc trend. However, a quantitative study is desired. The simplest model consists of a BCS-type Hamiltonian for each layer plus inter-layer tunneling.
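One possible way to write such a minimal model is sketched below. Everything in it is an assumption made for illustration: a common in-plane dispersion $\epsilon_{\mathbf{k}}$, a layer-dependent chemical potential $\mu_l$ that encodes the hole content of layer $l$ (with $l = 2$ the inner layer), a mean-field gap $\Delta_{l\mathbf{k}}$ in each layer, and a momentum-independent tunneling amplitude $t_\perp$ between adjacent layers.

```latex
H = \sum_{l=1}^{3} \sum_{\mathbf{k}\sigma}
      \left( \epsilon_{\mathbf{k}} - \mu_l \right)
      c^{\dagger}_{l\mathbf{k}\sigma} c_{l\mathbf{k}\sigma}
  - \sum_{l=1}^{3} \sum_{\mathbf{k}}
      \left( \Delta_{l\mathbf{k}}\,
      c^{\dagger}_{l\mathbf{k}\uparrow} c^{\dagger}_{l,-\mathbf{k}\downarrow}
      + \mathrm{h.c.} \right)
  - t_{\perp} \sum_{l=1}^{2} \sum_{\mathbf{k}\sigma}
      \left( c^{\dagger}_{l\mathbf{k}\sigma} c_{l+1,\mathbf{k}\sigma}
      + \mathrm{h.c.} \right)
```

In this form the redistribution of holes between the inner and outer layers would enter through the differences among the $\mu_l$, which would have to be determined self-consistently as the total hole concentration changes.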

[1] Nature 466, 19 August 2010, doi:10.1038/nature09293.

[2] If the same number of holes is added to an underdoped and to an optimally doped material, in which case is the energy reduced more?

A short comment on the need for 3-band models

(d) Three band model. Since the copper–oxygen layer involves one copper and two oxygen per unit cell (excluding the apical oxygen), the minimal microscopic electronic model requires a $d_{x^2-y^2}$ Cu orbital and two oxygen p orbitals. This is called the three band model [130, 131]. Various cluster calculations indicate that the low energy physics (below a scale of t ≈ 0.4 eV) can be adequately described by a one band Hubbard model [4]. Over the years this has become the majority view, but there are still workers who believe that the three band model is required for superconductivity. I think the original motivation for this view came from a period of nearly ten years when much of the community believed that the pairing symmetry is s-wave. It certainly is true that a repulsive Hubbard model cannot have an s-wave superconducting ground state. Three band models with further neighbour repulsion were introduced to generate the requisite effective attraction. The s-wave story was overturned once and for all by phase sensitive experiments in 1994 but some of the three band model proponents persevered. In particular, Varma introduced the idea of intra-cell orbital currents, i.e. a current pattern flowing between the Cu–O and O–O bonds as a model for the pseudogap [132]. This has the virtue of leaving the unit cell intact and this kind of order is very difficult to detect. With such a complicated model it is difficult to make a convincing case based on theory alone and a lot of attention has been focused on experimental detection of time-reversal symmetry breaking or spontaneous moments due to orbital currents. Unfortunately, the expected signals are very small and can easily be contaminated by a small amount of minority phase with AF order. Up to now there are no reliable results in support of this kind of orbital currents.

I don't quite agree with him on these remarks. For me, what urges people to take the O orbitals into account is the qualitative difference between p-type and n-type cuprates. Were the t-J model adequate for both types, such differences could hardly be justified. In particular, the formation of a spin glass in the underdoped regime can, in my opinion, hardly be reconciled with the t-J model: the spin glass shows up only in p-type materials. Recent experiments have shown the importance of the O orbitals even more clearly. More detailed reasoning can be found in this work [J. Phys.: Condens. Matter 21 (2009) 075702].

Interview with Professor Anton

Part 1:

http://www.youtube.com/watch?v=o5dNg6pmgPg

Part 2:

http://www.youtube.com/watch?v=kIzMZtQ9NwQ&feature=related

Visualizing relativity theory

http://www.spacetimetravel.org/

(2) Here is a news article illuminating the discovery of dark energy:

http://physicsworld.com/cws/article/indepth/31908

Wednesday, August 18, 2010

Boundary matters: topological insulators

Those who work in solid-state physics habitually treat a crystal as a periodic array of atoms. In reality this is only an approximation: any solid is bounded by its surfaces, where the periodicity terminates. Even so, in most cases people treat crystals as infinite and unbounded, so that exact periodicity can be used to obtain exact solutions of some models. Such solutions usually describe the sample very well, provided the bulk dominates over the boundaries. One such artificial boundary condition is the Born–von Kármán periodic boundary condition, under which the solutions are Bloch waves.
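As a small illustration of what the periodic boundary condition does, consider a one-dimensional chain of N cells with lattice constant a. Requiring ψ(x + Na) = ψ(x) for a Bloch wave forces exp(i k N a) = 1, so only the wavevectors k = 2πm / (Na) survive. The sketch below lists the N allowed values in the first Brillouin zone; the chain length and lattice constant are arbitrary illustrative numbers.

```python
import cmath
import math

def allowed_wavevectors(num_cells, lattice_constant):
    """Wavevectors in the first Brillouin zone allowed by psi(x + N a) = psi(x)."""
    first = -(num_cells // 2)
    return [2 * math.pi * m / (num_cells * lattice_constant)
            for m in range(first, first + num_cells)]

num_cells = 8           # hypothetical chain length
lattice_constant = 1.0  # arbitrary length unit

wavevectors = allowed_wavevectors(num_cells, lattice_constant)
for k in wavevectors:
    # The Bloch phase accumulated over the whole chain must equal 1.
    phase = cmath.exp(1j * k * num_cells * lattice_constant)
    print(f"k = {k:+.4f}, exp(i k N a) = {phase.real:.3f}{phase.imag:+.3f}j")
```

There are exactly N allowed wavevectors, one per unit cell, and their spacing 2π / (Na) shrinks as the sample grows, which is why the bulk spectrum looks continuous.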

Nevertheless, surface (not film [1]) states can display many exotic properties that bulk solutions do not support. These properties may be related to, say, impurities, dangling bonds, surface tension, and structural reconstruction. Such properties are what make modern computers possible: as we know, transistors and diodes rely on the properties of interfaces between two semiconductors.

Surface states have not ceased to surprise people. Some thirty years ago, people found a novel type of conducting channel in two-dimensional electron gas systems. Under a strong magnetic field, such systems exhibit the famous quantum Hall effects. It was later suggested that such Hall states possess edge states that can conduct electricity along the edges of the 2D sample. The magnetic field splits these states off from the insulating bulk states.

In 2005, Kane and his collaborators suggested [2] that such edge states could exist even without a magnetic field. They considered graphene, namely a single layer of graphite. In such a material, they guessed, there might be strong spin-orbit coupling. Such coupling can play a role similar to that of a magnetic field, and may thus create edge states. In this case, new complexity arises from the spin degrees of freedom (see the figure).

All the edge states described above are 1D objects. Most recently, 2D surface states of this kind were discovered in Bi2Te3 compounds. Such compounds have complex crystal structures and strong spin-orbit coupling. These states can conduct spin and charge along the surface. So one has this gorgeous phenomenon: an insulating bulk plus a metallic surface. Such materials are termed 'topological insulators'. Why topology? Topological properties are those that are invariant under continuous transformations of the parameter space. In Bi2Te3, the invariant is the number of edge states, which is conserved however the shape of the sample is changed.

Although something has been learned about these new properties, a realistic and analytically tractable solution has yet to appear. More experiments need to be done to confirm and extend our understanding.

[1] A surface is linked with a bulk, but a film has its own bulk and surface (edges). An interface can be deemed a special surface.

[2] PRL 95, 226801 (2005)

A missed black hole

A short account of the history regarding quantum criticality

… introduction to quantum critical phenomena, such as those that occur in heavy-fermion metals, magnets, and other solids. It comes with a good list of references and is thus very useful. [Nature 433, 20 January 2005, www.nature.com/nature]

How the referee works

Refereeing Physics Papers

Anyone who has read some of my postings, especially those smacking the quacks, would have noticed that I emphasized frequently the call for peer-reviewed journals. It is not that I'm in love with them, it is just that from history (at least since the beginning of the 20th century), there have been NO discoveries or ideas that have made significant advancement in the body of knowledge of physics that have not appeared in peer-reviewed journals. This means that for something to make any impact on physics at all, a necessary, but not sufficient, criterion is that it must first appear in a peer-reviewed journal, not on someone's webpage, or some e-print repository, or some discussion site. No matter what the claim is, if it hasn't appeared in a peer-reviewed journal, it has ZERO possibility of making ANY dent in the physics body of knowledge. There has been no exception.

The most common misconception about papers in peer-reviewed journals is that, since a paper has appeared in print, it must be valid. This isn't true, nor is it the aim of peer-reviewed journals. In science, and physics especially, the validity of something cannot be established immediately. An experimental discovery must be repeated, especially by independent parties, for there to be confirmation that such a discovery is real. Theoretical ideas require a slew of experimental verifications and tests for them to be confirmed as a valid description of a phenomenon. So all of these take time, and certainly several more publications. Hence, the "validity" of something isn't guaranteed just because it appeared in print.

What is more relevant, though, is that a paper in a peer-reviewed journal is (i) not written out of ignorance, (ii) not obviously wrong, (iii) full of enough information for someone else to reproduce either the experiment or the calculation, and (iv) written in a clear and understandable manner. This is where the referee comes in. The referee's task is to make sure at least those criteria are met (other more prestigious journals such as Nature, Science, and PRL have additional requirements).

Refereeing is done voluntarily. It is part of one's duty to the community, and referees get no compensation of any kind from the journals other than a thank you. You referee in your area of expertise, so the journals will send you papers in your area, often written by your competitors. I think journals like the Physical Reviews keep track of a referee's activities: how prompt he/she is at responding, how many "complaints" that referee has received, and so on (these are just my guesses based on casual conversations with a few of the editors). While the editors know who is refereeing what, the referees themselves are anonymous to the authors of the paper. So for better or for worse, the referees are free to criticize the paper being considered without revealing their identities (the editors do sometimes intervene if referees get out of hand with their reviews, but I do not know how often this occurs. It certainly hasn't occurred to me).

To be considered as a referee for a journal, one has to demonstrate one's expertise in a specific area of physics. Most often, it is by publishing in that particular journal. That's the most direct way of establishing one's reputation and expertise. I think that some journals such as the Phys. Review do keep track of a referee's publication track record (again, another guess).

My involvement in refereeing physics papers began when I was a postdoc. I had already published several papers in PRL, PRB, Nature, and Physica C at that time. One day, my postdoc supervisor contacted me and told me that he was going to refer the paper that he had been asked to review to me because, in his words, "you know more about this than I do". So he formally informed the editors of PRL to send the manuscript to me to review. That's how I got onto the Physical Review referee database, and I've been reviewing papers for them ever since. I am also a referee for J. Elect. Spect. because I attended a conference on electron spectroscopy and refereed a proceedings paper that appeared in that journal.

My approach to refereeing a paper involves several steps. The first and foremost is: is the paper clear in what it is trying to convey? At this stage, I really don't care yet if it's accurate, valid, or consistent, or even possibly of any importance. I want to know if they have been clear on what they're trying to say. A confusing paper does more harm to the advancement of knowledge than a wrong paper. I want to make sure the authors have made as clear as possible what they are trying to convey.

The second step is to see if the result is obviously wrong or impossible. I tend to referee experimental work, so the capabilities of the experimental setup are always something I pay attention to. A claim to detect something beyond the resolution of a particular instrument would be a warning bell, for example. So checking whether anything is obviously wrong is the most logical next step.

Thirdly, have the authors described in full their work to allow for someone else to reproduce? At the very least, they should include a citation of their experimental details if they have appeared elsewhere.

Fourth, are the results being presented clear and "complete"? Often, a referee can make suggestions to further bolster the authors' results by including either additional data, or even a new experimental measurement (this is the sign of a good referee).

Fifth, is the result consistent with and/or contradictory to already-published theories and/or experiments? This is where a referee really comes in, because the referee is expected to know the state of knowledge of that particular field. This means being aware of all the relevant papers in that field of study, so that something claiming contradictory results can be held to address everything that has been published. Thus, if a paper is claiming something contradictory, it MUST address this contradiction. Why is it different? Are the previous papers wrong? Is this a different angle of attack? Is it consistent with established ideas? Is this new? And so on. This usually takes the most effort out of a referee because he/she sometimes may have to do some "homework" to double check facts and what has already been published or reported.

Finally, for journals such as PRL, Nature, and Science, there is an additional requirement that the paper must have wide-ranging impact beyond just that narrow field of study. While most papers in peer-reviewed journals report on new ideas and results, these 3 journals require that the paper contain significantly important information that scientists from other areas may also find informative. So this is a tougher criterion to fulfill.

Note that for many journals, a manuscript being considered is often sent to more than 1 referee. It is not uncommon for PRL, Nature, and Science to send a manuscript to 3, even 4 referees. All of them must approve that paper for it to be published. So one can imagine how difficult it is to publish in these journals.

While science is still a human endeavor, peer-reviewed journals are still the best means of communication we have so far in advancing legitimate work.

Zz.

Tuesday, August 17, 2010

First we have the issue of the debate about the differences between Economics and Econophysics. Then there's an argument on why Economics will never be like Physics. Now comes an argument on why Econophysics will never work!

The markets are not physical systems. They are systems based on creating an informational advantage, on gaming, on action and strategic reaction, in a space that is not structured with defined rules and possibilities. There is feedback to undo whatever is put in place, to neutralize whatever information comes in.

The natural reply of the physicist to this observation is, “Not to worry. I will build a physics-based model that includes feedback. I do that all the time”. The problem is that the feedback in the markets is designed specifically not to fit into a model, to be obscure, stealthy, coming from a direction where no one is looking. That is, the Knightian uncertainty is endogenous. You can’t build in a feedback or reactive model, because you don’t know what to model. And if you do know – by the time you know – the odds are the market has changed. That is the whole point of what makes a trader successful – he can see things in ways most others do not, anticipate in ways others cannot, and then change his behavior when he starts to see others catching on.

It will be interesting to see if the writer has actually read Christophe Schinckus's AJP paper (my guess is he hasn't), and if Schinckus has a response to this.

The question of whether something works or is valid in fields of study such as economics, social science, politics, etc. is rather interesting. In the physical sciences, there's usually no ambiguity because we can either test it out, or go look for it. Something works when what it predicts can be observed and reproduced. So how does one determine if the various principles and models in economics, social science, politics, etc. are valid and do work? Simply based on previous data and observations that somehow fit into the model? But as this writer has stated, there's no model to fit because the model keeps changing due to such model-changing feedback. It appears that the whole field is based more on "intuition" than on any rational reasoning.

If that's the case, then it truly isn't a science but more of an art. So why do people who graduate with a degree in economics, social "science", or politics sometimes get a "Bachelor of Science" degree?

Zz.

Monday, August 16, 2010

One more evidence for CP violation ??

rge deviation.

FIG. 1: (Top) Proton-antiproton (p p̄) collisions sometimes create a B_s^0–B̄_s^0 pair among the spray of new particles. Subsequent decays may then produce muons: a μ+ from B_s^0, or a μ− from B̄_s^0. Due to the possibility for a B̄_s^0 to “oscillate” (transmute) into a B_s^0, and vice versa, muons of the same electric charge can be produced. Finally, comparing μ+μ+ (as shown) to μ−μ− (the CP-mirrored process, with matter and antimatter inverted) reveals if these oscillations display a CP asymmetry. (Bottom) The weak interaction, mediated by W bosons, can change any quark with electric charge −1/3 (d, s, b quarks) into any quark with electric charge +2/3 (u, c, t quarks) and vice versa. As time flows from left to right in the diagram above, the successive exchange of two W bosons allows a B̄_s^0 to oscillate into its antimatter partner, a B_s^0. The standard model also predicts a small CP asymmetry in these oscillations. It would not be surprising for physics beyond the standard model to include new particles which can mediate the oscillations, and hence alter the predicted asymmetry, allowing us to indirectly discern their existence. (Illustration: Carin Cain)

Sunday, August 15, 2010

The role of earth: sink or source ?

One key to accurately predicting future levels of atmospheric carbon dioxide (CO2) is understanding how land and atmosphere exchange CO2. Each year, photosynthesizing land plants remove (fix) one in eight molecules of atmospheric CO2, and respiring land plants and soil organisms return a similar number. This exchange determines whether terrestrial ecosystems are a net carbon sink or source. Two papers in this issue contribute to understanding the land-atmosphere exchange by elegantly analyzing rich data sets on CO2 fluxes from a global network of monitoring sites. On page 834, Beer et al. (1) estimate total annual terrestrial gross primary production (GPP) in an approach more solidly based on data than previous simple approximations. On page 838, Mahecha et al. (2) assess how ecosystem respiration (R) is related to temperature over short (week-to-month) and long (annual) time scales, and find a potentially important but difficult-to-interpret relationship.

- REPORTS

- Christian Beer, Markus Reichstein, Enrico Tomelleri, Philippe Ciais, Martin Jung, Nuno Carvalhais, Christian Rödenbeck, M. Altaf Arain, Dennis Baldocchi, Gordon B. Bonan, Alberte Bondeau, Alessandro Cescatti, Gitta Lasslop, Anders Lindroth, Mark Lomas, Sebastiaan Luyssaert, Hank Margolis, Keith W. Oleson, Olivier Roupsard, Elmar Veenendaal, Nicolas Viovy, Christopher Williams, F. Ian Woodward, and Dario Papale (13 August 2010)

Science 329 (5993), 834. [DOI: 10.1126/science.1184984]

- REPORTS

- Miguel D. Mahecha, Markus Reichstein, Nuno Carvalhais, Gitta Lasslop, Holger Lange, Sonia I. Seneviratne, Rodrigo Vargas, Christof Ammann, M. Altaf Arain, Alessandro Cescatti, Ivan A. Janssens, Mirco Migliavacca, Leonardo Montagnani, and Andrew D. Richardson (13 August 2010)

Science 329 (5993), 838. [DOI: 10.1126/science.1189587]
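
The sink-or-source bookkeeping described above can be made concrete with a toy calculation. Net uptake is gross primary production minus ecosystem respiration, and the temperature dependence of respiration is often summarized by a Q10 factor, R(T) = R_ref · Q10^((T − T_ref)/10). The sketch below assumes that common parameterization; the function names and all numbers are made up for illustration and are not taken from either paper.

```python
def respiration(temp_c, r_ref, q10, t_ref_c=15.0):
    """Ecosystem respiration at temp_c, using a Q10 temperature response.

    r_ref is the respiration at the reference temperature t_ref_c;
    q10 is the factor by which respiration grows per 10 degree C of warming.
    """
    return r_ref * q10 ** ((temp_c - t_ref_c) / 10.0)


def net_carbon_uptake(gpp, resp):
    """Positive result: net sink. Negative result: net source."""
    return gpp - resp


# Hypothetical numbers in arbitrary flux units, for illustration only.
gpp = 120.0
for temp in (15.0, 25.0):
    resp = respiration(temp, r_ref=100.0, q10=1.4)
    balance = net_carbon_uptake(gpp, resp)
    label = "sink" if balance > 0 else "source"
    print(f"T = {temp:.0f} C: R = {resp:.1f}, net = {balance:+.1f} ({label})")
```

With these made-up inputs the same ecosystem flips from sink to source as temperature rises, which is why the temperature sensitivity of R matters for projections.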

Thursday, August 12, 2010

New experiment alluding to the matter antimatter asymmetry

Since the 1960s, physicists have been gathering evidence that neutrinos can switch, or oscillate, between three different flavors — muon, electron and tau, each of which has a different mass. However, they have not yet been able to rule out the possibility that more types of neutrino might exist.

In an effort to help nail down the number of neutrinos, MiniBooNE physicists send beams of neutrinos or antineutrinos down a 500-meter tunnel, at the end of which sits a 250,000-gallon tank of mineral oil. When neutrinos or antineutrinos collide with a carbon atom in the mineral oil, the energy traces left behind allow physicists to identify what flavor of neutrino took part in the collision. Neutrinos, which have no charge, rarely interact with other matter, so such collisions are rare.

MiniBooNE was set up in 2002 to confirm or refute a controversial finding from an experiment at the Liquid Scintillator Neutrino Detector (LSND) at Los Alamos National Laboratory. In 1990, the LSND reported that a higher-than-expected number of antineutrinos appeared to be oscillating over relatively short distances, which suggests the existence of a fourth type of neutrino, known as a “sterile” neutrino.
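
For orientation, the standard two-flavor vacuum approximation gives the oscillation probability as P = sin²(2θ) · sin²(1.267 Δm² L / E), with Δm² in eV², L in km and E in GeV. The sketch below evaluates it, taking the 500-meter distance quoted earlier as the baseline. The mixing amplitude, mass splittings and beam energy are illustrative assumptions, not values from LSND or MiniBooNE.

```python
import math


def oscillation_probability(sin2_2theta, dm2_ev2, length_km, energy_gev):
    """Two-flavor vacuum oscillation probability (standard approximation)."""
    phase = 1.267 * dm2_ev2 * length_km / energy_gev
    return sin2_2theta * math.sin(phase) ** 2


# Illustrative (hypothetical) inputs, not taken from either experiment.
BASELINE_KM = 0.5
ENERGY_GEV = 0.5
SIN2_2THETA = 0.01

# Compare a small mass splitting with one of order 1 eV^2.
for dm2 in (2.5e-3, 1.0):
    p = oscillation_probability(SIN2_2THETA, dm2, BASELINE_KM, ENERGY_GEV)
    print(f"dm2 = {dm2:g} eV^2 -> P = {p:.2e}")
```

Under these assumptions the small splitting gives a negligible probability over such a short distance, while a splitting near 1 eV² gives a visible one. That is the sense in which a short-baseline signal hints at an additional, heavier neutrino state.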

In 2007, MiniBooNE researchers announced that their neutrino experiments did not produce oscillations similar to those seen at LSND. At the time, they assumed the same would hold true for antineutrinos. “In 2007, I would have told you that you can pretty much rule out LSND,” says MIT physics professor Janet Conrad, a member of the MiniBooNE collaboration and an author of the new paper.

MiniBooNE then switched to antineutrino mode and collected data for the next three years. The research team didn’t look at all of the data until earlier this year, when they were shocked to find more oscillations than would be expected from only three neutrino flavors — the same result as LSND.

Already, theoretical physicists are posting papers online with theories to account for the new results. However, “there’s no clear and immediate explanation,” says Karsten Heeger, a neutrino physicist at the University of Wisconsin. “To nail it down, we need more data from MiniBooNE, and then we need to experimentally test it in a different way.”

The MiniBooNE team plans to collect antineutrino data for another 18 months. Conrad also hopes to launch a new experiment that would use a cyclotron, a type of particle accelerator in which particles travel in a circle instead of a straight line, to help confirm or refute the MiniBooNE results.

Buffer layer with oxygen interstitials promote superconductivity

For y<0, there won't be any interstitials any more, and life is a bit easier.

It is well known that the microstructures of the transition-metal oxides (refs 1–3), including the high-transition-temperature (high-Tc) copper oxide superconductors (refs 4–7), are complex. This is particularly so when there are oxygen interstitials or vacancies (ref. 8), which influence the bulk properties. For example, the oxygen interstitials in the spacer layers separating the superconducting CuO2 planes undergo ordering phenomena in Sr2O1+yCuO2 (ref. 9), YBa2Cu3O6+y (ref. 10) and La2CuO4+y (refs 11–15) that induce enhancements in the transition temperatures with no changes in hole concentrations. It is also known that complex systems often have a scale-invariant structural organization (ref. 16), but hitherto none had been found in high-Tc materials. Here we report that the ordering of oxygen interstitials in the La2O2+y spacer layers of La2CuO4+y high-Tc superconductors is characterized by a fractal distribution up to a maximum limiting size of 400 μm. Intriguingly, these fractal distributions of dopants seem to enhance superconductivity at high temperature.

[1] Nature, Vol 466, 12 August 2010, doi:10.1038/nature09260

Wednesday, August 11, 2010

Plasmon enhanced microalgal growth

Photoactivity of green microalgae is nonmonotonic across the electromagnetic spectrum. Experiments on Chlamydomonas reinhardtii green alga and Cyanothece 51142 green-blue alga show that wavelength specific backscattering in the blue region of the spectrum from Ag nanoparticles, caused by localized surface plasmon resonance, can promote algal growth by more than 30%. The wavelength and light flux of the backscattered field can be controlled by varying the geometric features and/or concentration of the nanoparticles. © 2010 American Institute of Physics.

doi:10.1063/1.3467263

Linus Pauling's triple lectures many years ago

(2)http://www.youtube.com/watch?v=wKthfJEkMT0&feature=channel;

(3)http://www.youtube.com/watch?v=uqg7_kiOFhY&feature=channel

Limit on the speed of computers

Tuesday, August 10, 2010

Resource Letter PTG-1: Precision Tests of Gravity

This resource letter provides an introduction to some of the main current topics in experimental tests of general relativity as well as to some of the historical literature. It is intended to serve as a guide to the field for upper-division undergraduate and graduate students, both theoretical and experimental, and for workers in other fields of physics who wish to learn about experimental gravity. The topics covered include alternative theories of gravity, tests of the principle of equivalence, solar-system and binary-pulsar tests, searches for new physics in gravitational arenas, and tests of gravity in new regimes, involving astrophysics and gravitational radiation.

New surge in topological insulators

[2] PHYSICAL REVIEW B 82, 081305(R) (2010)

[3] Physics 3, 66 (2010)

The prediction [1] and experimental discovery [2, 3] of a class of materials known as topological insulators is a major recent event in the condensed matter physics community. Why do two- and three-dimensional topological insulators (such as HgTe/CdTe [2] and Bi2Se3 [3], respectively) attract so much interest? Thinking practically, these materials open a rich vista of possible applications and devices based on the unique interplay between spin and charge. More fundamentally, there is much to enjoy from a physics point of view, including the aesthetic spin-resolved Fermi surface topology [3], the possibility of hosting Majorana fermions (a fermion that is its own antiparticle) in a solid-state system [4], and the intrinsic quantum spin Hall effect, which can be thought of as two copies of the quantum Hall effect for spin-up and spin-down electrons [5]. Now, an exciting new addition to the above list comes from two teams that are reporting the first experimental observation of quantized topological surface states forming Landau levels in the presence of a magnetic field. The two papers - one appearing in Physical Review Letters by Peng Cheng and colleagues at Tsinghua University in China, and collaborators in the US, the other, appearing as a Rapid Communication in Physical Review B, by Tetsuo Hanaguri at Japan’s RIKEN Advanced Science Institute in Wako and scientists at the Tokyo Institute of Technology - pave the way for seeing a quantum Hall effect in topological insulators.
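
For a sense of scale: for an ideal massless Dirac surface state, the textbook Landau-level energies are E_n = E_D + sgn(n) · v_F · sqrt(2 e ħ |n| B), so the levels grow as sqrt(|n| B) rather than linearly in B as for ordinary electrons. The sketch below evaluates this formula. The Fermi velocity, field strength and function name are placeholder assumptions, not values or methods taken from either paper.

```python
import math

HBAR = 1.054571817e-34      # reduced Planck constant, J s
E_CHARGE = 1.602176634e-19  # elementary charge, C


def dirac_landau_level_mev(n, b_tesla, v_fermi, e_dirac_mev=0.0):
    """Energy (meV) of the n-th Landau level of an ideal 2D Dirac cone.

    n may be negative (levels below the Dirac point); n = 0 sits at the
    Dirac-point energy e_dirac_mev regardless of the field.
    """
    if n == 0:
        return e_dirac_mev
    magnitude_j = v_fermi * math.sqrt(2.0 * E_CHARGE * HBAR * abs(n) * b_tesla)
    sign = 1.0 if n > 0 else -1.0
    return e_dirac_mev + sign * magnitude_j / E_CHARGE * 1e3


# Hypothetical parameters: v_F = 5e5 m/s, B = 10 T.
for level in range(0, 4):
    energy = dirac_landau_level_mev(level, b_tesla=10.0, v_fermi=5.0e5)
    print(f"n = {level}: E = {energy:.1f} meV")
```

The field-independent n = 0 level and the square-root spacing are the usual fingerprints one looks for when identifying Dirac-like Landau levels in tunneling spectra.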

Decoding the colors of butterfly wings

1/f optimal information transportation

In living neural networks, the connection between function and information transport is studied with experimental techniques of increasing efficiency [1] from which an attractive perspective is emerging; i.e., these complex networks live in a state of phase transition (collective, cooperative behavior), a critical condition that has the effect of optimizing information transmission [2]. From the studies of complex networks, it is evident that the statistical distributions for network properties are inverse power laws and that the power-law index is a measure of the degree of complexity. Intimate connections exist between neural organization and information theory, the empirical laws of perception [3], and the production of 1/f noise [4], with the surprising property that 1/f signals are encoded and transmitted by sensory neurons with higher efficiency than white noise signals [5]. Although 1/f noise production is interpreted by psychologists as a manifestation of human cognition [6], and by neurophysiologists [7] as a sign of neural activity, a theory explaining why this form of noise is important for communication purposes does not exist yet. The well known stochastic resonance phenomenon [8] describes the transport of information through a random medium, obeying the prescriptions of Kubo linear response theory (LRT) [9], being consequently limited [10] to the stationary equilibrium condition. There are many complex networks that generate 1/f noise and violate this condition: Two relevant examples are blinking quantum dots [11] and liquid crystals [12]. The non-Poisson nature of the renewal processes generated in these examples [13] is accompanied by ergodicity breakdown and nonstationary behavior [14].
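
As a minimal illustration of what a "power-law index" means in practice, the sketch below estimates the exponent α of an inverse power law y = C · x^(−α) with a least-squares straight-line fit in log-log coordinates. The synthetic 1/f data and the function name are hypothetical. A log-log fit is only a rough first-pass estimator, and it is not claimed to be the method used in the cited studies.

```python
import math


def fit_power_law_index(xs, ys):
    """Return (alpha, C) for y ~ C * x**(-alpha) via a log-log linear fit."""
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    sxx = sum((lx - mean_x) ** 2 for lx in log_x)
    sxy = sum((lx - mean_x) * (ly - mean_y) for lx, ly in zip(log_x, log_y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # The slope in log-log space is -alpha; exp(intercept) is the prefactor C.
    return -slope, math.exp(intercept)


# Synthetic, noise-free 1/f spectrum: S(f) = 2 / f at 50 frequencies.
freqs = [0.1 * k for k in range(1, 51)]
power = [2.0 / f for f in freqs]
alpha, prefactor = fit_power_law_index(freqs, power)
print(f"alpha = {alpha:.3f}, C = {prefactor:.3f}")
```

For real, noisy spectra the fitted index depends on the frequency window chosen, so the window should be reported along with the exponent.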

Bubbles to penetrate cell membranes

Figure caption: The timed expansion and collapse of two bubbles creates a liquid jet that can penetrate a fine hole in the membrane of a cell. From left to right: A laser (green circle) focused inside a water bath locally vaporizes the liquid, creating an expanding bubble (light blue). Just after the first bubble reaches its maximum size, a second laser (red circle) generates another bubble. As the second bubble expands and the first bubble collapses, a rush of liquid forms along the vertical line (pink arrow) between the two, creating a high-speed liquid jet that accelerates toward the cell with enough force to penetrate the membrane.

Sunday, August 8, 2010

Hints on the scarcity of anti-matter

Saturday, August 7, 2010

Simulating metric signature effects with metamaterials

We demonstrate that the extraordinary waves in indefinite metamaterials experience an effective metric signature. During a metric signature change transition in such a metamaterial, a Minkowski space-time is created together with a large number of particles populating the space-time. Such metamaterial models provide a tabletop realization of metric signature change events suggested to occur in Bose-Einstein condensates and quantum gravity theories.

real-time tracking the motions of electrons

doi:10.1038/nature09212

Attosecond technology (1 as = 10^−18 s) promises the tools needed to directly probe electron motion in real time. These authors report attosecond pump–probe measurements that track the movement of valence electrons in krypton ions. This first proof-of-principle demonstration uses a simple system, but the expectation is that attosecond transient absorption spectroscopy will ultimately also reveal the elementary electron motions that underlie the properties of molecules and solid-state materials.

Friday, August 6, 2010

Thursday, August 5, 2010

Through the years leading to the discovery of BCS theory

Almost half a century passed between the discovery of superconductivity by Kamerlingh Onnes and the theoretical explanation of the phenomenon by Bardeen, Cooper and Schrieffer. During the intervening years the brightest minds in theoretical physics tried and failed to develop a microscopic understanding of the effect. A summary of some of those unsuccessful attempts to understand superconductivity not only demonstrates the extraordinary achievement made by formulating the BCS theory, but also illustrates that mistakes are a natural and healthy part of the scientific discourse, and that inapplicable, even incorrect theories can turn out to be interesting and inspiring.

http://arxiv.org/ftp/arxiv/papers/1008/1008.0447.pdf

Wednesday, August 4, 2010

Nodes found in the pairing gap in pnictides

The thermal conductivity κ of the iron-arsenide superconductor Ba(Fe1−xCox)2As2 was measured down to 50 mK for a heat current parallel (κc) and perpendicular (κa) to the tetragonal c axis for seven Co concentrations from underdoped to overdoped regions of the phase diagram (0.038 ≤ x ≤ 0.127). A residual linear term κc0/T is observed in the T→0 limit when the current is along the c axis, revealing the presence of nodes in the gap. Because the nodes appear as x moves away from the concentration of maximal Tc, they must be accidental, not imposed by symmetry, and are therefore compatible with an s± state, for example. The fact that the in-plane residual linear term κa0/T is negligible at all x implies that the nodes are located in regions of the Fermi surface that contribute strongly to c-axis conduction and very little to in-plane conduction. Application of a moderate magnetic field (e.g., Hc2/4) excites quasiparticles that conduct heat along the a axis just as well as the nodal quasiparticles conduct along the c axis. This shows that the gap must be very small (but nonzero) in regions of the Fermi surface which contribute significantly to in-plane conduction. These findings can be understood in terms of a strong k dependence of the gap Δ(k) which produces nodes on a Fermi-surface sheet with pronounced c-axis dispersion and deep minima on the remaining, quasi-two-dimensional sheets.

PHYSICAL REVIEW B 82, 064501 (2010)
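
A residual linear term is conventionally obtained by extrapolating κ/T to T → 0. The sketch below is a minimal illustration, assuming the simple form κ/T = a + b·T² (a constant electronic term plus a phonon-like term). The abstract does not state the fitting form actually used, and the data, coefficients and function name here are made up.

```python
def residual_linear_term(temps_k, kappas):
    """Least-squares fit of kappa/T = a + b*T**2; returns (a, b).

    a is the T -> 0 intercept (the residual linear term kappa0/T);
    b is the coefficient of the assumed phonon-like T**2 term.
    """
    xs = [t ** 2 for t in temps_k]
    ys = [k / t for t, k in zip(temps_k, kappas)]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


# Made-up data (arbitrary units): kappa = a*T + b*T**3 with a = 0.5, b = 20.
temps = [0.05, 0.08, 0.12, 0.16, 0.20, 0.25, 0.30]
kappas = [0.5 * t + 20.0 * t ** 3 for t in temps]
a_fit, b_fit = residual_linear_term(temps, kappas)
print(f"kappa0/T = {a_fit:.3f}, T^2 coefficient = {b_fit:.3f}")
```

In this picture a clearly nonzero intercept signals heat-carrying quasiparticles at T → 0, as expected for nodes, while an intercept consistent with zero points to a gap that stays finite for that current direction.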

Phonon electron coupling in HTSC as seen recently

To observe the dynamics of electrons and phonons in a high-Tc superconductor, Pashkin et al. used a pump-probe technique. (Top) The first laser pulse excites an electron above the superconducting gap, while a second pulse in the THz range, reflected from the sample at variable delay τ, probes the gap. As time progresses, the superconducting gap closes and then opens again as indicated in the THz reflectivity. (Bottom) Simultaneously with the electron excitation, lattice vibrations of oxygen atoms in the YBCO are probed by watching how the center frequency and asymmetry of the line shape of the reflected light change with time. Surprisingly, the electrons and phonons are excited and decay on the same time scale, suggesting that the dynamics of the two species are to some degree independent. Phys. Rev. Lett. 105, 067001 (2010)

F A Wilczek in this interview

He is good-natured, funny and thought to be among the smartest men in physics: Frank A. Wilczek, 58, a professor at the Massachusetts Institute of Technology who was one of three winners of the 2004 Nobel Prize in Physics. The award came for work Dr. Wilczek had done in his 20s, with David Gross of Princeton, on quantum chromodynamics, a theoretical advance that is part of the foundation of modern physics. Here is an edited version of two conversations with Dr. Wilczek, in October and this month.

Find the link here

[PHYSICAL REVIEW E 82, 021903 2010].
